Friday, 7 November 2014

The non-immigrant peril

Finally, in Britain's increasingly bitter and mean-minded grouchfest about immigration, someone has written something which doesn't just recognise that immigrants are human beings; it is also funny.

Friday, 24 October 2014

Aphrodisiacs, fertility and medicine

It's a particular pleasure to see Jennifer Evans' new book, Aphrodisiacs, Fertility and Medicine in Early Modern England, hit the newsstands:

If you really want to read a blog post about this book, you're probably better off reading one of hers, but my own stake in this was that I was the advisory editor for the RHS Studies in History series who worked with her on the book, and so I feel a certain avuncular pride in it.

With a title like that, it'll certainly sell (wait till you see the pictures). But the book has a deadly serious historical point. Modern scholars have been almost unable to write on early modern aphrodisiacs without being consumed by titillation (cheered on by publishers, naturally). At best, we think of them as part of what Faramerz Dabhoiwala calls the first sexual revolution. Jennifer's argument is that this fundamentally misses the point. Artificial stimulants to sexual desire were, in the early modern period, not solely or even primarily a means to debauchery or sensory gratification. They were a means to fertility, a human function whose connection to sex we are sometimes liable to forget. Infertility and subfertility were at least as sharply painful in the early modern period as they are today, and much harder to treat. But, unusually sensibly by early modern medical standards, the truism was that stimulating sexual desire would assist conception. Unlike plenty of other contemporary medical theories, this was, as far as it went, harmless. And perhaps a little better.

Jennifer's book carefully traces the uses of aphrodisiacs to treat fertility problems across the period, which is a useful corrective in itself, but I think also raises some more profound issues about the acculturation of sexual desire. It seems pretty clear to me from some of her sources that, in this period, fertility was itself desirable, and fertile sex was sexy sex. The modern world, which has for excellent reasons concluded that fertility and sexual fulfilment are almost in opposition to one another, is in a very different place.

In other words, this book manages the trick every good historian aims at: to make the past seem both familiar, intimately familiar in this case, and also very alien indeed. I recommend it.

Tuesday, 21 October 2014

Despatches from New Orleans

The Sixteenth Century Conference, this year in New Orleans, was as fun and stimulating as ever. The expected highlights included a terrific panel on scepticism featuring Peter Marshall, Alex Walsham and Phil Soergel. But the real fun is always to hear cutting-edge stuff from more junior people, so here’s my personal selection.

Amy Blakeway from Cambridge, who is positioning herself to be the bad girl of sixteenth-century Scottish history, did a lovely piece on the administrative structure of Scottish government in the 1530s. Stay with me. It’s partly that she can prove that King James V had a sort of official council when we didn’t know that before, and that’s just quite an impressive thing to discover. But it matters because Scottish institutions were wonderfully ad hoc and pragmatic, and it’s nice to see one swimming into existence in that way. James used it, apparently, to deal with the fact that he was always on the move, but he needed some sort of permanent executive in Edinburgh. And, as a bonus, he also used it the way bureaucracies are always used: to fob off unwelcome visitors. English correspondents who were trying subtly to denigrate James by implying that Henry VIII was his overlord got directed into a bureaucratic slow lane that seems to have been created specially for the purpose.

Brad Pardue, from the College of the Ozarks, gave a paper in a lamentably poorly attended session on the 1539 Great Bible title page, one of the best-known images in Tudor history:

I thought there was nothing more to be said about this (unless someone should discover something specific about how, when and by whom it was created). But Brad, amongst other things, pointed out something which is obvious once he said it. Look again at the bottom third, the common people gratefully receiving the king’s gift of God’s word:

No books! Not even the preacher has one! The Word does go to the lay elite as well as to the bishops, but it stops there. It’s not just that the common people are only supposed to learn one thing from the Word, namely, long live the king. Just to ensure they get the message, they aren’t allowed actually to see it. In 1543 Henry VIII restricted the common people’s access to the Bible by law, to great outrage: but look, back in 1539 he as good as told them he was going to!

But for me, the paper of the conference was from Jon Reimer, also from Cambridge: a PhD student working on an old friend of mine, the bestselling and shamelessly self-publicising Protestant polemicist Thomas Becon. I thought I had ‘done’ Becon’s early works. Jon, however, has used the dedications in those books as the basis of some really detailed, impressively careful detective work, and managed to conjure up a whole network of Kentish aristocrats who were supporting Becon – even if some of them, especially the older generation, didn’t seem actually to agree with him very much. He’s taken a broad-brush picture that we used to have and given us some gorgeously specific detail, and in the process opened up a whole network of printers, gentry and preachers working together in a messy, pragmatic way. This is dirty-fingers history the way it ought to be done: I can’t wait to see the PhD.

Wednesday, 1 October 2014

Journal of Ecclesiastical History

I've recently taken over as one of the editors of this wonderful journal, which at the same time is an alarming responsibility, a significant workload, a source of endless fascination and - thanks to the rest of the editorial and office team - a consistent delight.

Lots of things going on with it: in particular, the Journal's remit is the whole of the history of Christianity, but it has traditionally been centred on some specific areas and periods, and we're trying to broaden the range, especially beyond the North Atlantic world.

But I mention it today because the October issue is just out (vol. 65 no. 4, for those who are counting): and, in what I hope will become a regular service, I want to flag up a highlight or two from it.

Of course all the articles are excellent (we don't publish anything else). But the one I want arbitrarily to highlight is by Hector Avalos, a campaigning and controversial figure in American religious studies: you don't write a book called The End of Biblical Studies if your main intention is to make everyone like you. His article in this issue of the Journal is characteristically combative. The subject is a precise and momentous one: what exactly did that monument to Christian virtue, Pope Alexander VI, say in the 1490s about the right of the Spanish and Portuguese conquistadors to enslave the native peoples in the lands they discovered? One text in particular, the 1497 papal letter Ineffabilis et summi patris, has been cited to exculpate the papacy on this point. It has been read to imply that the Pope only allowed for indigenous peoples to be subjected or enslaved if they somehow voluntarily submitted themselves. Such a provision would of course have been completely ineffectual, but it fits well with a narrative that tries to distance the Church from responsibility for colonial atrocities.

That's not a narrative which Avalos instinctively likes, and this claim has roused him to do a terrific piece of scholarly detective work. The article is a precise and, to my eyes, devastating attack on this reading of Ineffabilis. Once he has translated the whole thing (not simply cherry-picked sections), and correctly identified the individuals involved and the political context within which they were operating, the attempt to defend the papacy's role vanishes like a mist. The letter was more about jockeying for position between the Spanish and Portuguese crowns, and in particular the Portuguese attempting to test the limits of the Tordesillas settlement which had excluded them from the western hemisphere. Who was allowed to enslave which heathen peoples was hotly disputed. The fact that Christians could and should enslave them was not in doubt.

Monday, 22 September 2014

Reformation Studies 2014

A belated report from the Reformation Studies Colloquium in Murray Edwards College, Cambridge (or, as I still instinctively call it, New Hall). I believe I should, for search-engine purposes, add the tag #RefStud.

I don’t think I heard a dud paper, but three of those that I heard stand out.

I am a longstanding fan of Kate Narveson, whose lovely paper on the emotional salience of the doctrine of assurance in English Protestantism had a warmth all too rare in histories of religion and theology. Once again, Kate demonstrates her humane yet rigorous engagement with her subjects. There aren’t many people out there who I’d rather hear speak about Puritan culture: and I don’t say that solely because this was a version of a piece due to be published in a forthcoming book on Puritanism and the emotions edited by Tom Schwanda and myself.

At ERRG, the ‘pre-conference’ for graduate students, I enjoyed a wonderful paper by David de Boer, a first-year doctoral student at the University of Konstanz, whose description of Catholic efforts to rescue both images and relics (and to turn images into relics) during the Protestant iconoclasm in the Netherlands in the 1570s was one of the stand-outs of the conference. The issues are horribly complex: David untangled them beautifully. One to watch.

But for me, the stand-out paper of the entire conference (and, thanks to the curse of parallel sessions, I missed lots of them) was from Neil Younger, whom I taught as an undergraduate many years ago. Neil’s account of Christopher Hatton, who was Lord Chancellor under Elizabeth I, was revelatory: we always knew that Hatton was an antipuritan, but the extent of his unmistakably Catholic connections and patronage, including individuals involved in unmistakable plots against the queen, has not I think been revealed like this before. As a glimpse of the ambiguities of the Elizabethan regime and of the compromises forced on all sorts of individuals compelled to be a part of it, this was new to me. I hope we see it in print soon.

Friday, 19 September 2014

The UK: the gift that can keep on giving

In my Panglossian way, I want to cheer an ideal result from the Scottish referendum.

Having spent numerous nights awake worrying about this, I am personally delighted by the result, not because of all the ephemeral fluff about currencies, prices and economies, but because my own instinctive national identity – ‘British’ – has not been voted out of existence.

But I am also glad that it was fairly close; and particularly glad about the promises made about Scottish ‘home rule’ in the campaign’s closing days, promises which must now be fulfilled, and which seem to me to take account of the fact that this minority nation in the British confederation has, for the present at least, a markedly distinct political culture.

That will mean justice for Scotland, and will I think reflect what John Smith used to call the settled will of the Scottish people.

It might be a pathway to full independence at the second referendum which surely will come in due course: though my own private theory, on which I will blog at a calmer moment, is that this is neither the much-threatened ‘neverendum’ nor the much-claimed once-in-a-lifetime opportunity, but rather a twice-in-a-lifetime opportunity, which won’t recede unless and until it is voted down a second time.

But I also think devo-max would mean justice for the English, who will now be forced to recognise that the effortless imperialism of their / our system, and its easygoing centralism and self-satisfaction, is unsustainable. (A 65/35 vote, by contrast, would have entrenched it.) The political upheaval which now beckons south of the Border is delicious to contemplate.

English politics is not corrupt in the classic sense: it is neither seriously venal nor actively murderous, which by either global or British standards makes it unusual. I fear, given the record of pre-Union Scotland and post-Union Ireland, that both of those temptations might have awaited a newly independent Scottish nation.

But if England’s historic contribution to the Union has been due process and the rule of law, Scotland’s contribution has been bloodyminded awkwardness, libertarianism and a willingness to face down oppressive systems no matter how historic their garb. Scotland is the country where tyrants are deposed whatever their tame parliaments say (hello, Mary Stuart), assassinated whatever oppressive splendour they have gathered (hello, James I and James III of Scots), or despised regardless of the powers they have officially accumulated (goodbye, Georges I-IV inclusive).

Each one needs the other. But in the present era, England needs Scotland’s culture more than Scotland needs England’s. What makes Britain worth persisting with is that mixture of order and rebellion: and we are, at present, more likely to be too quiescent than too rebellious.

So here’s the September 19 deal. Scotland remains part of the Union, for the present. But, sometime in the next decade, the UK as a whole will almost certainly vote on whether to remain in the EU or not. English voters (the Northern Irish and Welsh are almost spectators here) need to realise that if they vote the UK out, Scotland will very quickly vote to remain in Europe rather than in the UK.

Which is to say: if everything works out as I hope, the September 18 vote could save Scotland, England and indeed Europe as a whole from the petty-minded, sectional horrors that might otherwise confront them. If you voted no, feel proud. If you voted yes, feel proud too: you have shown the world that a country, Scotland, which values both absolute freedom and the rule of law is not to be taken for granted.

And if, like me and millions of other Scottish Brits and British Scots, you couldn’t vote this time, be patient. Your time will come.

Thursday, 28 August 2014

Delenda est laptops

I read this screed against laptop use in classes with initial scepticism and then increasing belief. It goes against my generally nonconfrontational approach to life but I think I might give it a go.

Imagining Christianity

Fresh from a summer holiday, my rare annual chance to read the odd novel, and to this year's discovery: Maria McCann's The Wilding, set in 1670s Dorset, in which a young man who believes himself to be an upstanding citizen progressively uncovers a whole regiment of skeletons in the family closet and realises that he is simply sheltered and naïve rather than virtuous.

It isn't the perfect novel. I did find that the ending fizzled a little. But it's a gripping read. McCann writes both beautifully and compellingly, which is not an easy double. The characters are appealing, particularly the young hero - who, by the end, is considerably more heroic than he himself recognises - and his parents, who you can't help but love as much as he does. It does the historical-novel thing unusually well: it captures lots of atmosphere and subtle detail, and much of the plot turns on one crucial incident from the Civil War, but most of it is as timeless as most human lives are. There are no attempts to dress a history lesson up as fiction, and no walk-on parts from big-name characters. And I challenge you to read it without thinking that you'd rather like a mug of cider.

Still, my point is not simply to plug it but to pick out a more unusual virtue. I think McCann manages to get her hero's religion right. He's not a particularly pious young man, but his character is underpinned by the assumed religious framework that pervades his life, from which he draws considerable strength, and which - briefly, at one pivotal moment - he openly questions. She doesn't draw attention to it very often. It simply seems normal, and it adds to his humanity.

Is it just me, or is successfully pulling this off in a historical novel really rare? I have read a series of historical novels recently, all of which I have enjoyed, all of which fall down on this point. Geraldine Brooks' Year of Wonders, another rollicking post-Civil-War read, ends with the heroine sloughing off her religion. Jill Paton Walsh's Knowledge of Angels, a much better book, is about the struggle and inevitable failure of a cleric to hold onto his faith when confronted with an alternative. Even the incomparable Wolf Hall and Bring Up the Bodies present Thomas Cromwell's religion as a species of modern secular scepticism.

I was beginning to wonder if the novel, as a genre, is capable of dealing effectively with characters who genuinely hold to and draw strength from their religion. Or is there a cult of individualism so deeply ingrained in it as a form that we can only construe adherence to truths beyond the self as a form of oppression? I struggle to think of many modern novels whose characters' religion is simply part of them (aside from those like Susan Howatch's Starbridge series, where that is the point). So McCann is a breath of fresh air. I've ordered her first novel, and may even get to read it before next summer.

Wednesday, 6 August 2014

Theologygrams

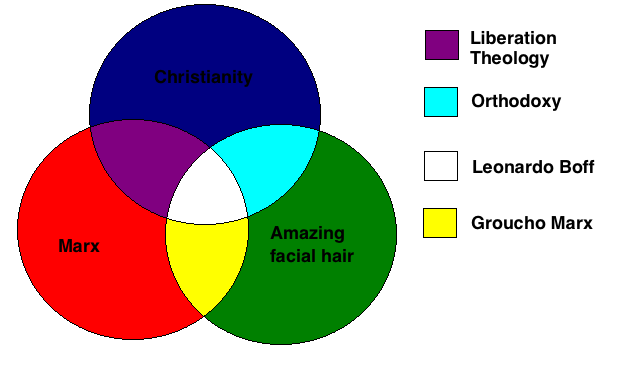

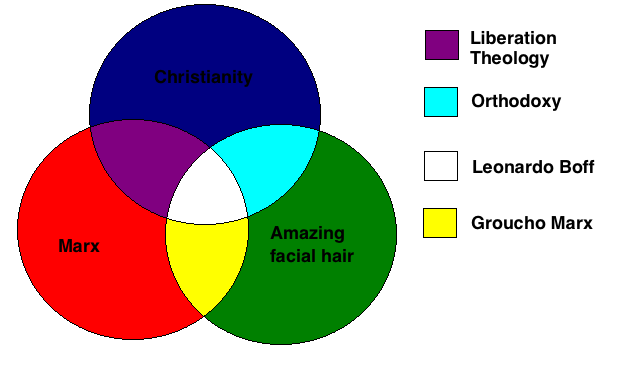

Ex-Durham theology students go on to do all kinds of wonderful things, but I've recently come across one of the more wonderful ones. Rich Wyld, formerly a doctoral student here, now a C of E parish priest in Dorset, runs a delightful blog called Theologygrams, which mixes some serious and perceptive points with ladlefuls of straight-faced silliness. And he now has a book out, which if you have the faintest taste for Biblically, theologically or mathematically based humour (any one of the three) will make you laugh out loud. Buy a job lot now in time for Christmas.

This one (below) isn't in the book but will give you a flavour!

Wednesday, 23 July 2014

The job presentation

Having blessed the world with my thoughts on the other stages of academic job-hunting, I’ve been asked for views on this too. It is now standard for most academic jobs in the UK to include a formal presentation followed by a Q&A, in addition to the interview. Typically the presentation is open to all academic staff and research students in the relevant Department. The audience will usually give their feedback to the interview panel at the end of the process. The panel isn’t bound by what the audience say, but they invariably take it seriously, and most of the time end up agreeing with them.

Some candidates self-destruct during the presentation, so much so that we only allow them to finish and to come to the interview out of courtesy. Some candidates ace the presentation so well that they nail the job there and then. From the couple of dozen I’ve attended and chaired over recent years, some tips.

1. Read the brief. Then, read the brief again. There is no standard form. You may, especially for a junior or temporary post, be asked to perform a teaching-related task, such as giving a lecture as if to a class of first-year students. You may (this is the Durham standard) be asked to give a paper on an aspect of your current or recent research. There may be some hybrid of the two, or sometimes, for posts with particular requirements, something more specific to that job. Make sure that you understand exactly what you’re being asked to do. If you don’t understand it, ask for clarification. And, if you can do it without being clunky, it can be useful to mention to the audience what your brief was: it’s perfectly possible that they haven’t all been told.

2. But also, don’t be fooled by the brief. They have asked you to do one task, but they will use your performance in that to assess your all-round suitability. Typically, the presentation is in practice used to assess both whether you are a good teacher and whether your research is high-quality. You may be asked to give a research paper, but the audience want to know that you are a clear, engaging and lively communicator who could safely be put in front of a room full of undergraduates. You may be asked to give a beginners’ lecture, but the audience want it to be fizzing with ideas and demonstrating your scholarly qualities too. This double bind is infuriating but it is also one of the things which makes the presentation such an effective test.

3. Stick to time. I’m sorry this should have to be said, but it does. If you are asked to speak for 20 minutes, ensure that you speak for between 18 and 21 minutes. Less is much better than more.

4. Visual aids: not essential but probably helpful. Of course it depends on the task and your own style, but simply speaking from notes may be braver than you need to be. But if you do it, do it well. PowerPoint presentations which simply list your talking-points are rarely a good idea: they work better for pictures, quotations or other material best delivered visually.

5. If you do an AV presentation, especially an online one such as Prezi, be in touch with the relevant person to ensure that it can be set up and tested in advance. Never assume it’ll be all right. Personally I recommend the method by which my old colleague Catherine Richardson helped to persuade us of her unflappable omnicompetence: showing up with a complete set of handouts in your bag in case the technology fails. It is a very nice demonstration that you are a safe pair of hands.

6. And anyway, don’t underrate the old-fashioned paper handout, which your audience can digest much more easily than they can a succession of projected slides, and which they can also take away with them. Get the organisers to confirm the expected numbers present beforehand, and then add at least 50%. Make your own copies and bring them if you possibly can: your hosts may offer to make them but (I say with a hot pang of shame, having once as a host done this to a candidate … sorry, again …) they may not actually do it. And don’t forget to include your email address on a handout. Even if you don’t get the job, you may make some worthwhile contacts.

7. Regardless of what sort of visual aid you use, make it look good. It is worth spending time on this, and indeed getting help from the people you know who are better at it than you. Something with high production values pleases and tickles an audience; it makes you look professional; and again, it reassures them that you’ll be a competent lecturer.

8. Proofread, both your visuals and what you will say. Slips in grammar and punctuation will always be noticed, and I am afraid they genuinely do undermine academics’ confidence.

9. To read from a text or to ad-lib? No clear answer, but this is one of the key questions. A droning, dull or inaudible performance can sink you immediately. So can a rambling, inarticulate, over-chatty or (I have heard this) half-shouted performance. Reading allows you to control your text and your timings much more, and also helps if you are nervous. Speaking from outline notes or even unprompted can be much more engaging, but risks either stumbling or being over-glib. Do what you are comfortable with, but if you have a full text, don’t forget that this is a rhetorical performance, in which delivery and eye contact are crucial, so rehearse it sufficiently that the text becomes little more than a prompt. (It can be helpful to print it in a large point size.) If you are ad-libbing, again, rehearse it often enough that you know what you are doing. If you are worried about how you will come across, make a video recording of yourself doing the presentation. It is excruciating, but an excellent way to confront yourself with whatever aspect of your presenting style doesn’t work very well.

10. Content is harder to advise on. You will have a brief to follow and multiple boxes to tick. But some suggestions. Unless you have to, try not to lapse into autobiography. The presentation which begins ‘First I will give you an overview of my research projects of the past five years …’ rarely stirs the blood. And try to give the audience something to take away with them: one really striking idea, discovery, image or interpretation which will be new to most of them, and which will persuade them that you are a good teacher, a strong researcher and an interesting colleague. Better to give them one thing in enough depth to show your talents than to skate over too much too quickly: leave them wanting more.

11. You are, probably, not a comedian by training. But if you have a chance to make your audience smile, take it. Again it helps persuade them you are a good communicator. It makes them feel more warmly to you; and, if you are nervous, there is nothing like a few grins from your audience to help you to relax.

12. Questions at the end. You can’t do much to prepare for these, but again they can make or sink a candidate. Often it makes sense to answer the room, not just the individual questioner: both to avoid getting caught on one weirdo’s hobby-horse, and to help you broaden out a question which may be uncomfortably precise. Of course, if you don’t know the answer, say so. But if you are directly challenged on something, don’t be scared to fight back. One recent presentation in Durham turned into quite a charged argument between the candidate and a lecturer about an abstruse point of detail. The consensus afterwards was that, although the lecturer had probably won that bout on points, the candidate had put up a good fight. He got the job.

Sunday, 20 July 2014

Blood money

A last thought on the moral history of Atlantic slavery, before I move on to something happier.

The history of this subject is so focused on Britain and the United States - and not without reason - that we can lose sight of the wider comparisons. One striking fact from those comparisons which was new to me: in every Atlantic jurisdiction but one, when slavery was abolished, financial compensation of some sort was paid to the slave-holders. They were being deprived of what the law had until then recognised as 'property', and they needed to be compensated.

Which prompts two, contradictory thoughts.

First, while of course you can see why it happened at the time, this is grotesque. Rewarding people for ceasing to commit a terrible crime, well, yes, OK. It's a bit like giving an amnesty to a dictator who agrees to step down: it sticks in the craw, but if it's the price of getting a deal done, there's a case for it. But the implication that slavery was legitimate - that, in fact, all that has happened is that the state has used compulsory-purchase to acquire the slaves and then to free them - that's not very nice.

I can see an excellent case for compensation being paid when slavery was abolished. But not to the enslavers, for pity's sake! And the fact remains: neither enslaved people nor their descendants have been compensated in any jurisdiction for the awful crimes that were committed against them. And we all know that those crimes' after-effects are still being felt across the whole Atlantic world.

Who should pay? Perhaps, at the least, the inheritors of those who were paid compensation.

... But second. There was one case where no compensation was paid: the United States, where slavery was ended by war and by a dictated peace, in which slave-holders did not need to be appeased. America did not pay in money to free its slaves, it paid in blood. As Lincoln said, 'every drop of blood drawn with the lash shall be paid by another drawn with the sword', which is certainly more stirring and virtuous than paying the South off would have been.

But was it better? I don't know. If compensating slave-holders has a merit, it's this: the slave-holder who takes the money has consented, indeed bought into the new system. The white South, by contrast, took a century to accept the terms of the peace imposed on it in 1865. And since it couldn't be subject to indefinite military occupation, that meant that it quickly reverted to something which, while it wasn't slavery as such, wasn't exactly freedom either.

It couldn't have been any other way, of course. The South would never have accepted a deal to pay it for abolishing slavery, at least not until the North was in no mood to pay and had no need to. But in retrospect, almost anything that could have drawn some of the racial poison which still flows through America's veins would have been worth it.

Thursday, 19 June 2014

Slaves and extra miles

I've spent much of the last six weeks reading about Protestantism and Atlantic slavery, which is as ghastly a subject as I have ever researched. The scale and relentlessness of the horrors quickly render you numb. I am not surprised that so many slaveholders denied that their victims were human: if you are going to treat people that way, how else can you retain your own sanity?

But for all the ghastly atrocities, and the attitudes ranging from active malice to paternalistic bigotry which committed them, there are some stirring tales too. One of the most delightful is told in this exceptionally lovely book, Jon Sensbach's Rebecca's Revival.

That terrific picture on the cover tells half the story itself. It is a painting of a woman born with the name Shelly, who took the name Rebecca for herself, later adding her husbands' surnames. She was born on Antigua, probably as a slave, in about 1718; then kidnapped or sold to the Danish Caribbean island of St. Thomas, aged six or seven; kept as a house-slave by a Dutch family, who allowed her to be educated and baptised, and eventually freed her in her early teens. She became a linchpin of the Moravian mission to St Thomas ('everything depends on her', one of the missionaries wrote), married one of the missionaries, was imprisoned and nearly sold back into slavery. In the end, however, she went to Europe, remarried, and then spent many years running a mission school in West Africa. The painting is part of a family portrait from the 1740s. It is the face of a woman who has seen both hell and heaven; that smile is not meant lightly.

Incidentally, she also appears to be the first black African and perhaps also the first woman to be ordained in a Protestant church (she was made a Moravian deaconess in 1746, which entitled her to lay on hands to admit new members, and to preach to other women).

It's a great book, written for a mass readership but scholarly, and I'd recommend it to anyone at a loss for a Christmas present. It does, though, come up against one of the persistent problems to do with Christianity and slavery. It is not so much that the Bible treats slavery as a fact of life, rather than an evil to be opposed - slavery was a fact of life in the premodern world. Rather, the Gospel ethic of non-resistance - turn the other cheek, go the extra mile - is strained to its limits by slavery, in which the moral authority of nonviolence is completely swallowed up by the slaveholders' expectations. Frederick Douglass, the escaped slave who became one of nineteenth century America's most powerful antislavery activists, described how he once fought back against his master; how he, astonishingly, escaped being killed for it; and how he then vowed never meekly to submit to punishment again. It was part of his own, complex alienation from Christianity; and who will say he was wrong?

But there is non-resistance and non-resistance. Sensbach's book mentions the case of a Moravian convert on St Thomas named Abraham, a slave, who became one of the leaders of the church on the island. During one of the planters' campaigns of intimidation against the Moravians, Abraham was attacked on the road one night, bound, and viciously beaten before being dumped at their church. Later, he carefully sent the ropes back to his attackers: along with a note apologising for the fact that they were a little torn and damaged.

UPDATE: Not quite the first ordained black African Protestant. The much more ambiguous, but equally interesting, Johannes Capitein beat her by four years. He was ordained in the Dutch Reformed church in 1742, the same year he published a book arguing for the legitimacy of slavery. His avowed reason was that, unless slaveholders were persuaded that missionaries were not a threat, they would never allow the Gospel to be preached to their chattels.

Saturday, 7 June 2014

Academic discipline

I like the generally egalitarian and faintly anarchic atmosphere of British higher education, in which there is not too steep a power-gradient between academics and students, and something close to collegial equality between academics of different ranks. The stultifying hierarchies of some other systems I've met, or the sharp division between tenured and non-tenured academics, are not attractive.

And while British academics like to grumble about the consumerisation of higher education, as students start demanding more value for money in exchange for their fees, I'm not persuaded this is a bad thing. We should be in a position where students expect a lot from universities and universities expect a lot from students. My old friend Corey Ross, back in his days working on East Germany, used to say there was something late-Soviet about British higher education: students pretend to work, and staff pretend to teach them. We are mercifully leaving that behind.

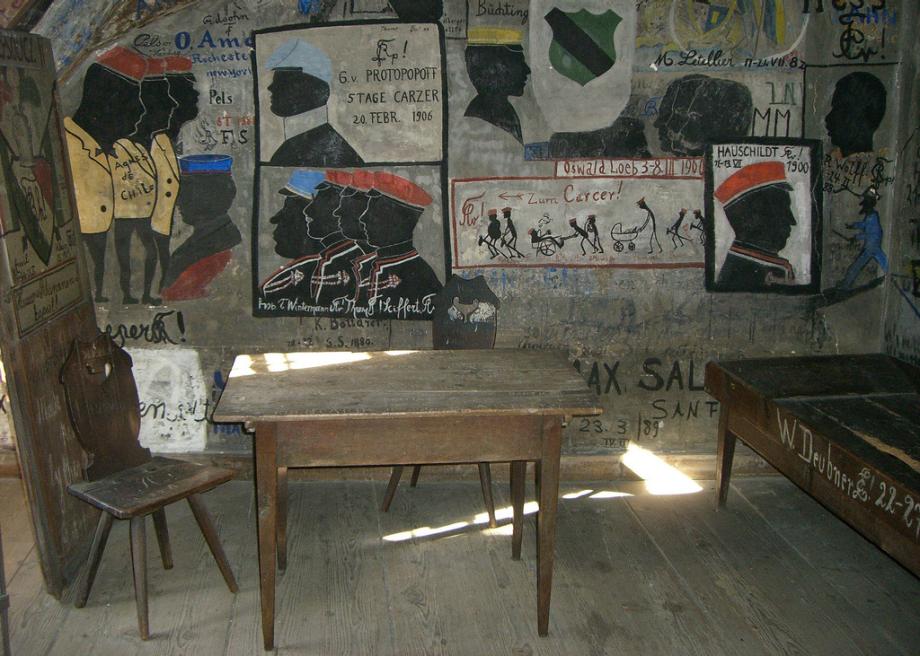

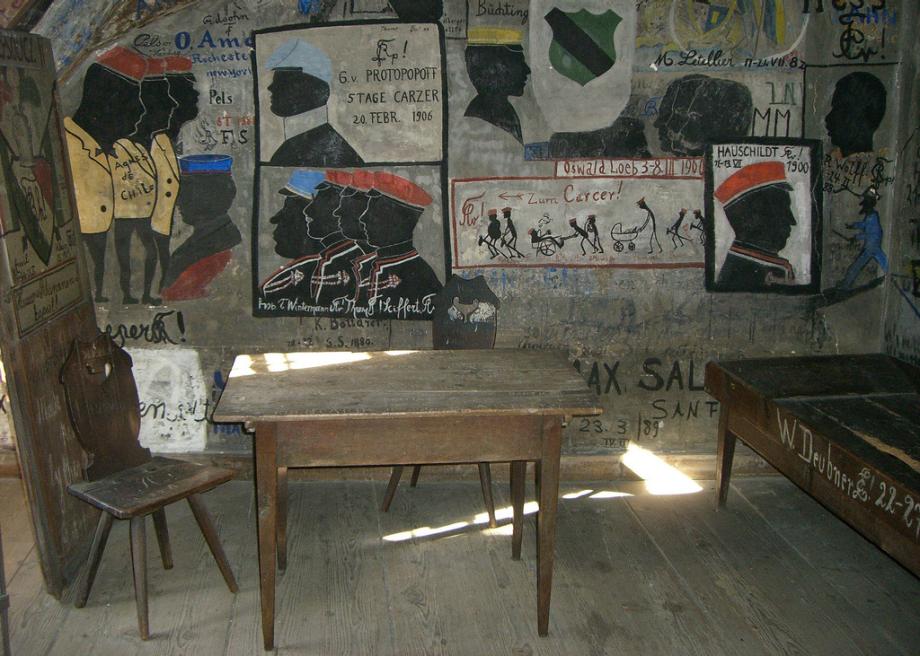

Still, I am reminded that another age achieved this in a different way, by this delightful article on the former student jail at Heidelberg University. Be honest, academic readers: doesn't a sliver of you wish your university had one?

But what really cheers me is the images of the jail itself, suggesting that the German academy is, or was, more anarchic than it is given credit for; or, perhaps, that the intellectual and political stimulation provided by jailing students is not to be underestimated.

Wednesday, 21 May 2014

Scurvy knaves

Like most people, I learned the cautionary tales about scurvy as a child: how, before the nineteenth century, sailors, and sometimes even aristocrats, would develop symptoms moving from loss of appetite to skin spots, bleeding gums, loss of teeth and hair and eventual horrible death - and how various heroes, chiefly Captain Cook, solved the problem by prescribing such unsavoury diets as (depending on which children's book you read) a lemon a month, a raw onion a month or (which seems to have been more accurate) a regular dose of sauerkraut. No wonder Jellicoe couldn't beat the German navy.

But now, from a lovely book by Karen Ordahl Kupperman* on a forgotten, doomed colonial venture (the Puritan-sponsored colony on Providence Island, off the Honduran coast, from 1630-41) I learn about an alternative, earlier cure. A scandal arose in the colony in 1634, when a man named Floud, an indentured servant to Captain William Rudyerd, the colony's muster-master, was discovered to have died following a truly vicious whipping. Indentured servants were Englishmen and not slaves: you weren't supposed to do that sort of thing. The accusation was that Floud had complained to the colony's governor about the mistreatment he had already suffered at Captain Rudyerd's hands, provoking the further 'discipline' which killed him.

But Captain Rudyerd had an explanation: it was not a punishment at all. Floud, apparently, was developing scurvy (like many of the colonists). The whipping was intended as treatment.

The point is that one of scurvy's first symptoms is lethargy and difficulty in movement, later exacerbated by painful joints. At least, that's the way round we put it nowadays. Apparently many in the 17th century, perfectly logically, reversed the causation: scurvy is simply an extreme form of laziness, in which the moral defect has become so severe that the body breaks down. When you have a lazy servant, then, rigorously correcting his fault will both ensure you are better served, and also potentially save his life by reintroducing him to the virtues of hard labour. Rudyerd explained that he had 'used all fair means to prevent the Scurvy which through laziness was seizing upon him'.

In this case, the treatment was successful but the patient died. Whether this regime of treatment was more or less popular with victims than was sauerkraut is a subject on which we clearly need further research.

*Karen Ordahl Kupperman, Providence Island 1630-41: The Other Puritan Colony (Cambridge, 1993), p. 157.

Friday, 25 April 2014

The poetry of history

Molière's gentleman discovered that he'd been speaking prose all his life, but I think I may have discovered that I've been trying to write poetry. That, at least, is what I take from a lively paper at a conference yesterday hosted by Durham's Hearing the Voice project. Jules Evans spoke about poetry as a kind of shamanism, with reference to Ted Hughes in particular: the point being the experience that Hughes and other poets (not only poets) describe, that of entering an inner state of ecstasy, reverie or something similar, in which they can access voices or depths not normally available. These voices are not under the poet's control and are experienced as something other - an inspiration. What's more, poets, like shamans, are 'masters of both worlds': neither stuck lumpishly in this one, nor vanished so far into the inner world that they cannot come back. The poet is able not only to access these insights, visions or whatever, but also to bring them back to the everyday world and to fashion the experience into something that can be effectively communicated to others through an artistic medium. And the most accomplished wordsmith who does not travel into some inner world is not really a poet.

He concentrated on poetry, partly because of Hughes, partly because of Shelley's claim that poets are the world's unacknowledged legislators (which still sounds like sour grapes to me, but never mind). Still, at the end he asked if this poetic role was being taken by other media, film most obviously, which can invite the viewer into someone else's inner world.

I find this an appealing way to understand art defined very broadly, including the sort-of art-forms which I am involved with. The sermon can be understood in this way, I think, but I want to make a more outrageous claim: so can the best academic monographs. It made me realise that what I was trying to do in my most recent book, amongst other things, was to give voices to the dead: to converse with them and hear their critique of me and my own age, as well as to give mine of them and theirs.

No doubt I failed miserably, but I think this is part of what a good historian should be trying to do: to be master of both worlds. There is no substitute for the hard graft of scholarship: reading, deciphering, collating, checking, hunting, cross-referencing. History is after all a craft, and a rigorous one. But the history which I think is most worth reading, and the history which (however laughably) I aspire to write, is more than a craft. It genuinely hears the voice of its subjects, and draws its readers into their world - whether that world is a church, a battle, a parliament or a field.

Like all inspiration, this is not just hard to do: it can't actually be done at all. At best you can cultivate it. It happens or it doesn't; and then you make good use of it or you don't. We aren't used to thinking of scholarly achievement in those terms, and we don't train students to do so. Reverie and daydreaming don't have good presses. But I do recall as a doctoral student staring up blankly at the Bodleian Library's roof and seeing, again and again: Dominus illuminatio mea.

Thursday, 27 March 2014

Church Times 'Best Christian Books'

So I am told, as a Church Times reviewer, that they're doing a feature this autumn on 'the best 100 Christian books of all time'. And they want suggestions. The criteria are:

Works should be of enduring value, influential in their time or after it. The list will encompass fiction and nonfiction, theological scholarship and popular titles, authors ranging from St. Augustine to Eamon Duffy to C. S. Lewis. (The only suggestion we will not consider is the Bible, divinely inspired authorship constituting an unfair advantage.) Among the categories to consider: mission and ministry, church history, theology, prayer and spirituality, the Old and New Testaments, liturgy and worship, literature and apologetics.

Now, there's a fun parlour game! What to nominate? I passed over the Prayer Book (which won't be short of friends) and Pilgrim's Progress (likewise). Here is my initial suggestion and rationale:

Philip Jacob Spener's Pia Desideria (1675). It's a short call to arms - or rather, to renewed piety: it was the book which kick-started Pietism, and is therefore indirectly (no, actually, pretty directly) responsible for modern Evangelicalism. What makes it so wonderful is that it combines two things which are almost never brought together. First, a moving call for moral and spiritual renewal: its insistence (which both Pietists and Evangelicals have too often forgotten) that to be a Christian means to follow Christ, not to be able to win doctrinal arguments. Against the hair-splitters and heresy hunters of his day, he warned that at the last judgement ‘we shall not be asked how learned we were’, but rather ‘how faithfully and with how childlike a heart we sought to further the kingdom of God’. And he added that if St. Paul were to try to follow one of the theological debates of the age, he would ‘understand only a little of what our slippery geniuses sometimes say’. BUT, he combines this preacherly idealism with a level-headed practicality about what should be done. In particular, he makes the revolutionary suggestion that Christians should not leave their spiritual fate in the hands of their ministers, nor of the political powers who usually dominated their churches, but rather take matters into their own hands. He recommended ‘the ancient and apostolic kind of church meetings’, that is, Bible study and discussion groups for mutual support and encouragement. Methodist bands and classes got the idea directly from him. It seems so normal now that it's hard to grasp how empowering and revolutionary it once was.

I think Spener deserves top-100 billing. But maybe just because I've been working on it these last couple of months. What other obscure gems want rescuing?

Saturday, 15 March 2014

Surviving shortlisting

Back in the day, when I was trying to get my foot on the first rung of the academic jobs ladder, a more senior friend told me: once you start getting interviews, you’re OK, because then you know your number will come up sooner or later. And it’s true, more or less. But how do you get onto those shortlists?

I’m shortlisting for four different posts this month. Typically there are twenty, forty, a hundred applicants who need to be whittled down to four for interviewing. How do you make the cut? Some suggestions.

- Apply for the job that is advertised. No two academic jobs are exactly the same. Don’t just send a CV and a generic covering letter, which does not engage with the specifics of the institution you’re applying for and the particular requirements of the post. That’s one of the quickest ways to the bin.

- Write good English. I’m sorry, but it needs to be said. Most academic jobs have ‘effective communication’ or something of that kind in the person specification. If a shortlisting panel is looking for reasons to exclude people (and usually, we are), poor English makes it easy for us. If English is not your native language, this is especially important. We can’t easily judge how fluent a non-native speaker will be, and for any job that requires communication with students this may be decisively important. If your written English is not flawless, have someone proofread your letter and CV for you.

- Remember that, in CV terms, less is often more. There are different CV cultures: in the USA, the comprehensive CV is often favoured, whereas in Britain we still tend to like the more selective one. But in any case, don’t include things for the sake of it. Maybe you have published thirty book reviews: if so, tell us that and perhaps list the journals for which you have reviewed, but don’t spend two pages giving us the full list. Or again, if you have non-academic achievements to highlight, be cautious. Make sure it’s relevant: often it is, often it isn’t.

- In particular: don’t fill your CV so full of detail that readers miss the good stuff. I have seen people who present lists of publications in purely chronological order, so you have to read down through all the book reviews and short notes to spot that, half-way down page 2, there is a major Yale University Press monograph. Make it easy for your readers to see what you need them to see.

- Don’t be ambiguous. Remember that panellists are suspicious and will interpret any ambiguity in the worst possible light. The most common case is where a publication is simply described as ‘forthcoming’. Forthcoming where and how? Is it something you are thinking of writing one day, is it appearing on a fixed date next month with a major academic press, or somewhere in between? Be precise: ‘under review with the Journal of Nonsense’, ‘under contract and due for submission on 31 December’, ‘in proof’, or whatever.

- Help readers to understand how good your work is. Panellists reading lists of publications are trying to work out if any of this stuff is any good. Where there are signals that you can send (such as publication in a major journal or by a major academic press), do so. If those signals need amplifying, do so: for example, if you have published in a competitive journal which some of the panel may not be familiar with, make sure that they know that that’s what it is. And if you want to quote from reviews, article referees’ reports, or whatever, do so: a nice phrase or two under an article can help persuade a panellist that this might be a quality application worth spending a tiny bit more time on.

- The same goes for your teaching. It is common to see CVs listing the names of courses or modules that applicants have taught. But those tell us very little. If you can, add something which indicates how good you were (feedback scores from students, for example) or which indicates the scale of what you were doing (so we know the difference between taking a few auxiliary classes and designing a whole lecture course from scratch).

- Don’t leave unexplained gaps. If there is a period in your CV of more than a few months when your movements are unaccounted for, people spot it and wonder – even though they shouldn’t. If you had a career break for family reasons; if you were in non-academic work for a period; or anything like that, say so. If you have something that you don’t want to reveal (like an extended period of ill health, a spell in prison, or what have you), then find a way of explaining the missing years which is not actively deceptive. But if we see someone who got their PhD ten years ago and spent eight years of the intervening time not publishing, the application will be binned unless the gap can be explained.

- Photos. Increasingly you see academic CVs with a cheesy photo of the applicant at the top. I know this is common in the business world. I really don’t like it: not just because some academics are off-putting to look at, but because it invites the panel to join the applicant in playing subliminal games of gender, age and racial politics. I don’t think it ever helps an application.

- And do try not to be actively weird or unintentionally funny. Most piles of applications contain one like this. One that springs to mind is the candidate who listed contact details for four referees, as requested, but added after one of the names: ‘(deceased)’. And then gave this person’s full postal address. Such moments help to brighten the committee’s day, but the CV that makes us laugh almost never makes the list.

UPDATE: See also my notes on how to survive the job presentation and on how to then self-destruct during the interview.
